Neural networks have become powerful tools for solving complex problems in artificial intelligence. Two prominent architectures are Long Short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNNs). Both perform well in their own domains, but their underlying mechanisms and typical applications differ significantly.

LSTMs: Mastering Sequential Data

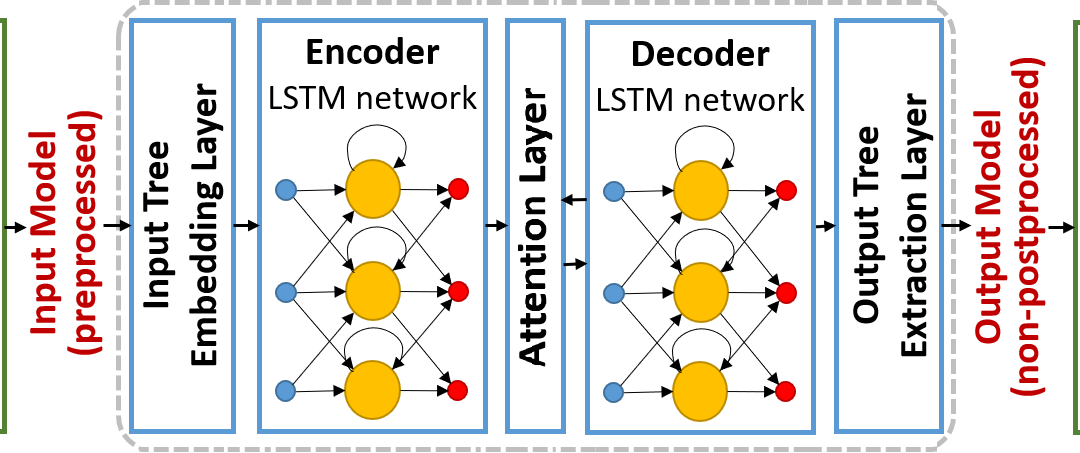

LSTMs, a type of recurrent neural network (RNN), are designed to handle sequential data, such as time series, audio, and natural-language text. Their architecture lets them capture long-term dependencies within a sequence, which makes them well-suited for tasks like machine translation, speech recognition, and sentiment analysis.

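At the heart of an LSTM lies a memory cell whose contents are regulated by three gates. The forget gate decides how much of the existing cell state to keep, the input gate decides how much new information to write, and the output gate decides how much of the cell state to expose as the hidden state. Because the gates are learned, an LSTM can selectively remember and forget information over time, which is what lets it process long sequences effectively.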
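The gate mechanism can be made concrete with a minimal sketch of one LSTM time step in plain Python. It follows the standard LSTM equations, but the function names (`lstm_step`, `init_params`, `run_lstm`), the parameter layout, and the random untrained weights are assumptions chosen for illustration, not any particular library's API.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a standard LSTM cell, written with plain lists.

    x: input vector; h_prev, c_prev: previous hidden state and cell state.
    params: dict mapping gate name -> (W, b), where W has one row per hidden
    unit and each row holds len(h_prev) + len(x) weights.
    """
    z = h_prev + x  # concatenate previous hidden state and current input

    def affine(name):
        W, b = params[name]
        return [sum(w * v for w, v in zip(row, z)) + b_k for row, b_k in zip(W, b)]

    f = [sigmoid(a) for a in affine("forget")]       # how much old memory to keep
    i = [sigmoid(a) for a in affine("input")]        # how much new content to write
    o = [sigmoid(a) for a in affine("output")]       # how much memory to expose
    g = [math.tanh(a) for a in affine("candidate")]  # proposed new content

    # New cell state: kept part of the old memory plus the gated new content.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # New hidden state: a gated, squashed view of the cell state.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights and zero biases (untrained)."""
    rng = random.Random(seed)

    def gate():
        W = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
             for _ in range(hidden_size)]
        return W, [0.0] * hidden_size

    return {name: gate() for name in ("forget", "input", "output", "candidate")}


def run_lstm(sequence, hidden_size, params):
    """Feed a sequence through the cell one step at a time."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h, c


params = init_params(input_size=2, hidden_size=3)
h, c = run_lstm([[1.0, 0.5], [0.2, -0.3], [0.0, 0.9]], hidden_size=3, params=params)
```

The cell state `c` is updated only by element-wise scaling and addition, so when the forget gate stays close to 1 and the input gate close to 0, the stored values pass through a step almost unchanged. That is the sense in which the cell can carry information across many time steps.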

CNNs: Capturing Spatial Patterns

CNNs, on the other hand, shine when dealing with grid-like data, particularly images. Their architecture is inspired by the human visual system, emphasizing local connections and feature extraction. By convolving filters over the input data, CNNs effectively capture spatial patterns and relationships within images.
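To illustrate what "convolving a filter over the input" means, here is a minimal sketch in plain Python. The `conv2d` function, the toy 4x4 image, and the hand-picked edge filter are assumptions for illustration; in a real CNN the filter values are learned during training, and a library implementation would be used instead of nested loops.

```python
def conv2d(image, kernel):
    """Slide a small filter over a 2D grid (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is applied as written, without flipping.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where intensity increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
# feature_map == [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

Each output value depends only on a 2x2 patch of the input (a local connection), and the same four weights are reused at every position. The feature map is nonzero only in the column where the edge sits, which is the kind of spatial pattern a convolutional layer picks up.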

CNNs have driven major advances in computer vision, with strong results in image classification, object detection, image segmentation, and facial recognition. Stacked convolutional layers extract hierarchical features: early layers respond to simple patterns such as edges, and deeper layers combine them into larger structures. This ability makes CNNs indispensable tools in computer vision.

LSTMs vs. CNNs: A Tale of Two Strengths

Each architecture has its own strengths. LSTMs model temporal dependencies, which makes them well-suited for sequential data processing. CNNs extract spatial features, which makes them a strong fit for image and video analysis.

Applications of LSTMs

Machine translation: LSTMs can capture word order and context across a sentence, which helps them translate between languages.

Speech recognition: LSTMs can process speech signals and convert them into text, enabling voice assistants and dictation tools.

Sentiment analysis: LSTMs can analyze social media posts, reviews, and other text data to gauge public opinion and sentiment.

Applications of CNNs

Image classification: CNNs can identify and classify objects in images with remarkable accuracy, powering image search engines and autonomous vehicles.

Object detection: CNNs can locate and identify specific objects within images, enabling applications like security systems and traffic monitoring.

Image segmentation: CNNs can segment images into distinct regions, supporting tasks like medical image analysis and scene understanding for self-driving cars.

Choosing the Right Neural Network

The choice between LSTMs and CNNs depends on the nature of the data and the task at hand. For sequential data, where order and long-range context matter, LSTMs are a natural choice. For grid-like data such as images, where local spatial patterns matter, CNNs are usually the better fit.

In recent years, hybrid architectures that combine LSTMs and CNNs have emerged, leveraging the strengths of both to tackle complex problems. These hybrid models have demonstrated promising results in areas like video captioning and sentiment analysis of visual content.
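One common way to combine the two is to run a convolutional feature extractor on every frame and pass the resulting feature vectors to an LSTM. The sketch below shows that data flow in plain Python under heavy simplifications: the names `conv_max` and `encode_video`, the two hand-written filters, the max-pooling choice, and the random untrained weights are all assumptions for illustration, not a trained model or a library API.

```python
import math
import random


def conv_max(frame, kernel):
    """Slide `kernel` over `frame` (no padding, stride 1), keep the strongest response."""
    kh, kw = len(kernel), len(kernel[0])
    return max(
        sum(frame[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
        for r in range(len(frame) - kh + 1)
        for c in range(len(frame[0]) - kw + 1)
    )


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h, c, params):
    """Standard LSTM update; params maps gate name -> (weight rows, biases)."""
    z = h + x  # concatenate previous hidden state and current input
    pre = {
        name: [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(W, bias)]
        for name, (W, bias) in params.items()
    }
    f = [sigmoid(a) for a in pre["forget"]]
    i = [sigmoid(a) for a in pre["input"]]
    o = [sigmoid(a) for a in pre["output"]]
    g = [math.tanh(a) for a in pre["candidate"]]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def encode_video(frames, kernels, params, hidden_size):
    """Convolutional features per frame, then an LSTM across frames."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for frame in frames:
        features = [float(conv_max(frame, k)) for k in kernels]  # spatial step
        h, c = lstm_step(features, h, c, params)                 # temporal step
    return h


# Toy setup: two hand-written 2x2 filters and random, untrained LSTM weights.
rng = random.Random(0)
kernels = [[[-1, 1], [-1, 1]], [[-1, -1], [1, 1]]]
hidden_size = 3
params = {
    name: (
        [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size + len(kernels))]
         for _ in range(hidden_size)],
        [0.0] * hidden_size,
    )
    for name in ("forget", "input", "output", "candidate")
}

# Three 3x3 frames in which a bright pixel moves left to right.
frames = [
    [[1, 0, 0], [0, 0, 0], [0, 0, 0]],
    [[0, 1, 0], [0, 0, 0], [0, 0, 0]],
    [[0, 0, 1], [0, 0, 0], [0, 0, 0]],
]
summary = encode_video(frames, kernels, params, hidden_size)
```

The final hidden state `summary` is a fixed-size vector that depends on both what appears in each frame and the order of the frames. In a video captioning system, a vector like this would typically be passed to a decoder that generates text.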

Conclusion

LSTMs and CNNs represent two powerful tools in the neural network arsenal, each tailored for specific data types and applications. Understanding their strengths and limitations is crucial for effectively tackling a wide range of machine learning challenges.

In summary, LSTMs are well-suited for sequential data processing, while CNNs excel in analyzing grid-like data. The choice between the two depends on the nature of the problem and the available data.